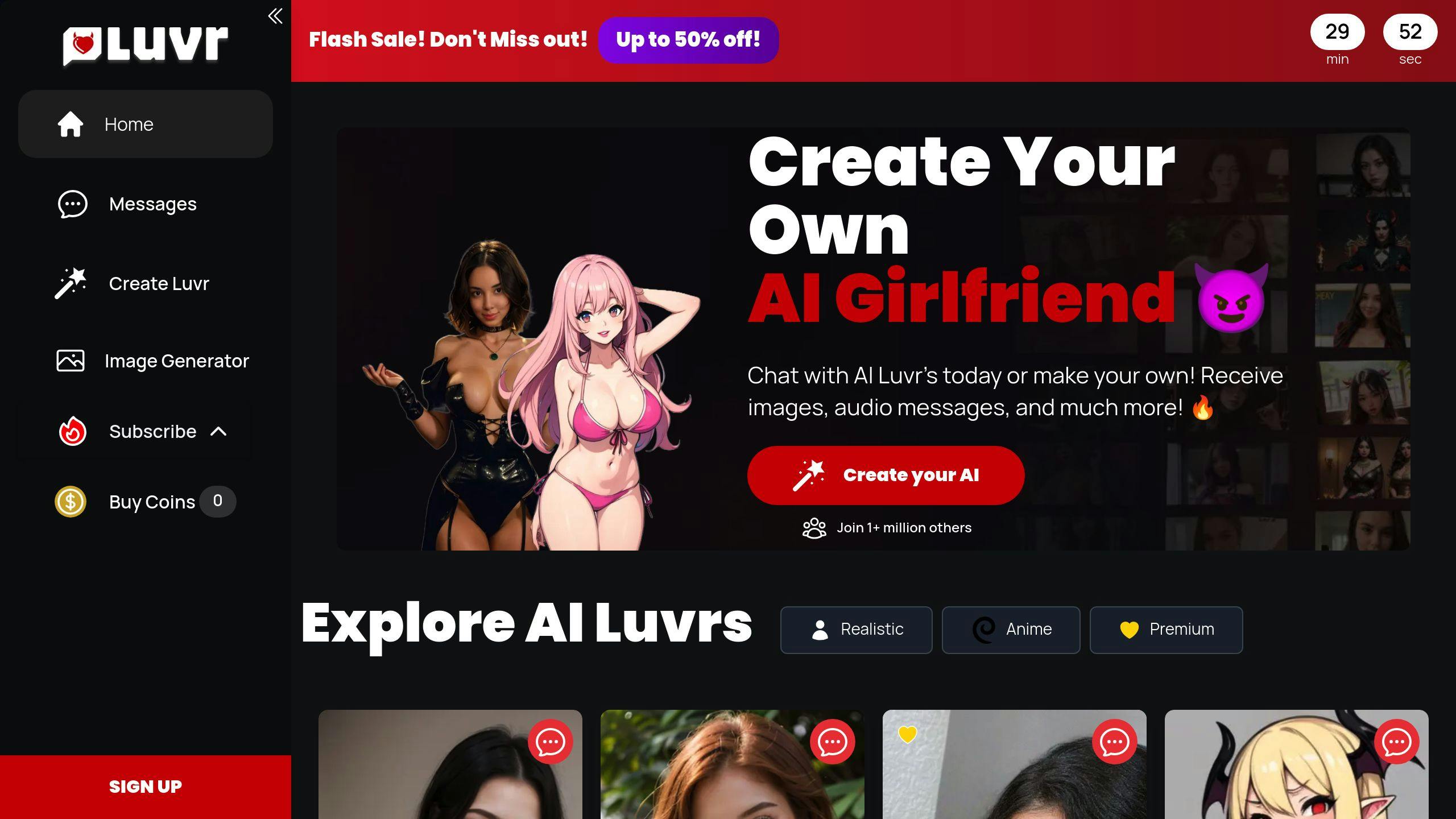

Create Your Own AI Girlfriend 😈

Chat with AI Luvr's today or make your own! Receive images, audio messages, and much more! 🔥

4.5 stars

AI Roleplay and Privacy Boundaries

AI roleplay platforms, like Luvr AI and Replika, let users interact with lifelike AI companions. But they also raise serious concerns about privacy and security. Here’s what you need to know:

- Privacy Risks: Users often share sensitive data despite concerns, creating a "privacy paradox."

- Security Measures: Platforms use encryption, multi-factor authentication, and AI moderation, but remain vulnerable to manipulation tactics like "jailbreaking."

- Regulations: Compliance with GDPR and regular audits are key to building trust.

- Ethical Challenges: Balancing user freedom with safety and ethical boundaries is a constant struggle.

Quick Comparison:

| Feature | Luvr AI | Replika |
|---|---|---|
| Data Protection | End-to-end encryption | Encryption and secure storage |
| User Control | Editable, deletable data | Privacy settings available |
| Manipulation Defense | Advanced AI monitoring | AI filtering and moderation |
| Regulatory Compliance | GDPR-compliant | GDPR-compliant |

Platforms must combine strong security, user education, and ethical practices to protect users while ensuring engaging interactions.

1. Luvr AI: Privacy and Security Features

Luvr AI prioritizes protecting user privacy while fostering an open space for AI roleplay. The platform carefully balances user freedom with strong data protection practices.

To keep user data safe, Luvr AI uses end-to-end encryption for all interactions, including messages, images, and audio. Additional safeguards include multi-factor authentication, AI-powered content monitoring, and the option for anonymous chat sessions.

Luvr AI actively prevents manipulation attempts by employing advanced AI systems that monitor interactions in real-time without compromising user privacy. Users have control over their personal data through clear consent options, the ability to edit or delete information, and transparent privacy policies.

The platform also addresses ethical concerns by combining security features with user education. It regularly updates its protocols to counter emerging threats and meet regulatory requirements. To tackle the "privacy paradox" [2], in which users share sensitive information despite their concerns, Luvr AI emphasizes proactive measures and educates users on safe interaction practices.

While Luvr AI’s approach to privacy and security is robust, comparing it to other platforms in the AI roleplay space can provide additional insights into industry standards.

2. Other AI Roleplay Platforms: Privacy and Security Features

In addition to Luvr AI, other platforms in the AI roleplay space also prioritize privacy and security. A great example is Replika, a well-known platform that showcases how providers handle the tricky balance between user freedom and safeguarding privacy.

Shared Security Measures

Most platforms implement core security features similar to Luvr AI. These include encryption, authentication, and AI moderation to protect user interactions. However, the real difference lies in how each platform tackles challenges like "jailbreaking", where users try to manipulate AI systems. To address this, many platforms use advanced AI tools to identify and block such manipulative attempts [1].

| Security Feature | Implementation Approach | Purpose |
|---|---|---|
| Content Moderation | AI-powered filtering systems | Identify and block harmful content |
| Data Storage | Encrypted servers with controls | Secure user data |
| Authentication | Multi-factor verification | Prevent unauthorized access |
| Ethical Controls | Custom rule engines | Limit generation of restricted content |

Regulatory and Ethical Standards

To comply with regulations like GDPR, platforms emphasize transparency and give users control over their data. They also focus on minimizing data collection and preserving user anonymity. Regular security audits and penetration testing further reinforce these platforms' reliability and security.
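As a rough illustration of data minimization, the Python sketch below keeps only an allow-list of fields and replaces the user ID with a keyed hash. The field names, the `pseudonymize` and `minimize` helpers, and the key handling are all assumptions made for illustration, not any platform's actual implementation. Pseudonymized data is generally still treated as personal data under GDPR, so a step like this reduces exposure rather than guaranteeing anonymity.

```python
import hashlib
import hmac

# Hypothetical allow-list: only these fields are retained for storage.
ALLOWED_FIELDS = {"message", "timestamp"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed SHA-256 hash (HMAC) so the raw ID is not stored."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, secret_key: bytes) -> dict:
    """Drop every field not on the allow-list and attach a pseudonymous user reference."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    kept["user_ref"] = pseudonymize(record["user_id"], secret_key)
    return kept
```

In this sketch, fields such as an email address never reach storage because they are not on the allow-list, and the same user maps to the same `user_ref` only while the secret key stays unchanged.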

Adapting to Change

The industry is constantly evolving, introducing AI tools aimed at ensuring ethical interactions while still allowing users creative freedom. These advancements are designed to address new threats and respond to user needs, aiming for a balance between safety and user expression [4].

While these platforms share many of the same security foundations, their success in earning user trust and handling challenges varies, highlighting both their strengths and areas for improvement.

Strengths and Weaknesses of AI Roleplay Platforms

AI roleplay platforms must balance user privacy and security against engaging, flexible interactions. Here's a closer look at how these platforms manage that trade-off.

| Aspect | Strengths | Weaknesses |
|---|---|---|
| Privacy and Security Measures | End-to-end encryption; multi-factor authentication; AI content moderation; regular security audits; user data anonymization | Susceptibility to AI manipulation; risk of unauthorized access; difficulty in detecting threats; limited control over data retention |
| User Freedom | Personalized character creation; flexible interaction options; customizable experiences | Possibility of inappropriate content; ethical concerns; challenges in maintaining boundaries |

Platforms are employing tools like custom rule engines to detect and block restricted content while keeping interactions dynamic and user-friendly [1]. For example, services such as Luvr AI aim to strike a balance between allowing creative expression and enforcing safety measures.
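A custom rule engine of this kind could be sketched in Python as follows. This is a minimal illustration under stated assumptions: the rule names, the patterns, and the `evaluate` function are hypothetical, and a real platform would likely combine rules like these with other moderation tools rather than rely on regular expressions alone.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    pattern: re.Pattern
    action: str  # "block" stops the message; "flag" marks it for review


# Hypothetical rules, for illustration only.
RULES = [
    Rule(
        "jailbreak_attempt",
        re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
        "block",
    ),
    Rule(
        "contact_details",
        re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b"),
        "flag",
    ),
]


def evaluate(message: str, rules=RULES):
    """Return (verdict, matched_rule_names); "block" outranks "flag", which outranks "allow"."""
    matched = [rule for rule in rules if rule.pattern.search(message)]
    if any(rule.action == "block" for rule in matched):
        verdict = "block"
    elif matched:
        verdict = "flag"
    else:
        verdict = "allow"
    return verdict, [rule.name for rule in matched]
```

Keeping rules as data rather than hard-coded branches means new patterns can be added or retired without touching the evaluation logic, which fits the need to respond to new manipulation tactics.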

To address privacy concerns, many platforms now let users adjust privacy settings, giving them greater control over their data [1]. However, vulnerabilities like jailbreaking continue to pose challenges, pushing platforms to innovate their security approaches constantly [3].

Conclusion

AI roleplay platforms face the challenge of balancing user freedom with data security. To succeed, these platforms need to adopt strong security practices while ensuring an engaging and enjoyable user experience.

Custom rule engines and advanced filtering systems play a key role in preventing misuse without stifling creativity [1]. For example, platforms like Luvr AI show how customizable security settings can allow users to manage their data sharing while keeping the platform safe and functional.

The "privacy paradox" highlights a critical issue: users often share sensitive data despite being concerned about privacy [2]. To tackle this, platforms should combine technical safeguards like encryption and AI moderation with tools that empower users, such as adjustable privacy settings and transparent consent processes. Compliance with regulations like GDPR and regular audits further builds trust by promoting accountability [4].

Ethical guidelines are just as important as technical and legal measures in maintaining user trust. The future of AI roleplay depends on platforms adopting security measures, educating users, and being transparent about their operations. This way, they can offer a safe space for users while protecting their personal data [4][5].

The industry needs to go beyond technical solutions and focus on comprehensive user protection. A mix of advanced security, user education, and ethical practices will be key to ensuring the long-term success and trustworthiness of AI roleplay platforms.

FAQs

Here are some answers to common questions about privacy and safety when using AI roleplay platforms.

Is Replika private?

Replika states that it does not share user conversations, photos, or other content with advertising partners or use them for marketing purposes. The platform uses encryption and secure storage to safeguard user data. However, users should take extra steps to protect their own information:

- Regularly review privacy settings.

- Avoid sharing sensitive personal information in conversations.

- Familiarize yourself with the platform's data collection practices.

Are romantic AI apps safe?

Privacy reviews have raised concerns about romantic AI apps. Mozilla's research identified 11 such apps with questionable privacy practices, including excessive data collection, unclear sharing policies, and weak security measures [2][3].

To stay safe while using these apps:

- Carefully read and understand their privacy policies.

- Use any available privacy-enhancing features.

- Share only minimal personal information.

These concerns highlight the importance of clear policies and stronger security in the AI roleplay space.