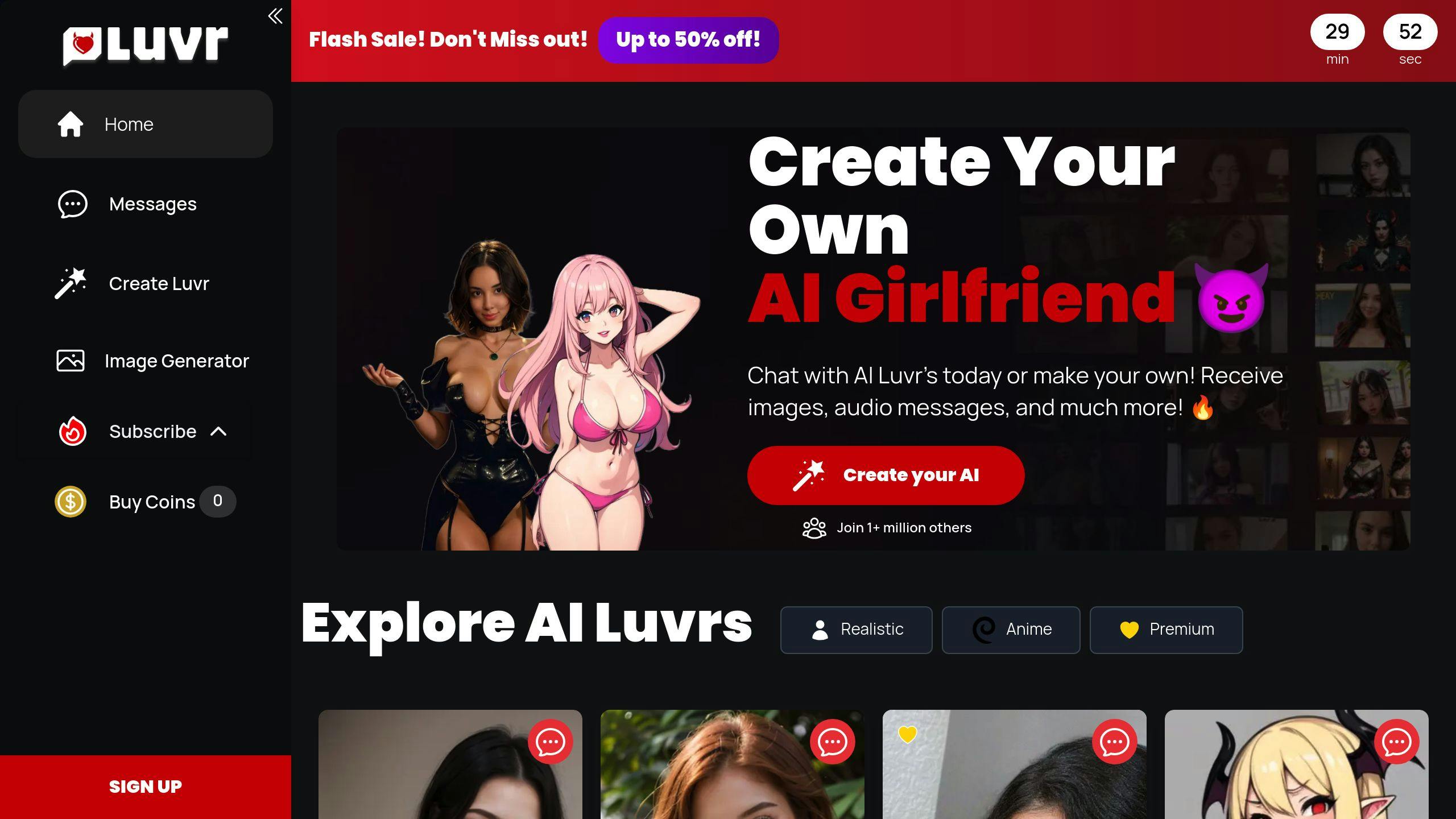

Create Your Own AI Girlfriend 😈

Chat with AI Luvr's today or make your own! Receive images, audio messages, and much more! 🔥

4.5 stars

Ethical AI Roleplay: User Guidelines

AI roleplay platforms like Luvr AI and Character AI are transforming human-AI interactions, but they also bring ethical challenges. Here’s what you need to know:

- Key Issues: Data privacy, content safety, and platform misuse are top concerns.

- Platform Differences:
  - Luvr AI: Focuses on creative freedom but lacks strong ethical safeguards.
  - Character AI: Prioritizes safety with strict moderation but limits flexibility.

- Proposed Solutions: Stronger data policies, real-time moderation, and transparent user consent systems.

Quick Comparison:

| Platform | Strengths | Weaknesses |
|---|---|---|
| Luvr AI | Customizable characters, freedom | Weak ethical standards, minimal transparency |
| Character AI | Strong moderation, clear rules | Limited storytelling flexibility |
| DreamGen | Balanced approach, storytelling | Basic encryption, limited AI transparency |

Balancing user freedom with ethical safeguards is critical for the future of AI roleplay platforms.

1. Ethical Practices on Luvr AI

Transparency

Luvr AI needs to clearly explain how its AI-generated responses work and how user data influences these interactions [5]. Providing this clarity is crucial for building trust. While the platform has taken some steps toward safeguarding user privacy, more detailed disclosures are necessary.

Data Privacy

Luvr AI employs several measures to protect user data. Here's a breakdown of key privacy practices:

| Privacy Feature | Implementation |
|---|---|
| Data Collection | Anonymous data gathering |
| User Control | Tools for managing personal data |
| Storage Security | Encrypted chat histories |
| Data Deletion | Option to delete user information |

These measures aim to ensure user safety while respecting individual privacy.

User Safety

Protecting users is a top priority, but there’s room for improvement. While Luvr AI offers private and secure chats, it should strengthen its safety protocols [3]. Suggested improvements include:

- Adding crisis resources and easier reporting tools

- Strengthening content moderation systems

- Creating clear processes for addressing user concerns

Another challenge lies in addressing biases that might lead to harmful interactions, which ties into the next point.

Bias Management

Preventing biased interactions is essential for creating an inclusive platform. To minimize unfair outcomes, Luvr AI should regularly audit its training data, ensure diverse representation, and involve a variety of perspectives during development [5]. This helps reduce the risk of exclusionary or harmful interactions.

Informed Consent

Informed consent is critical for protecting user rights. Luvr AI should provide clearer explanations about how user data is used and obtain explicit consent before collecting personal information [3]. Strengthening these consent protocols would reinforce transparency efforts.

Luvr AI’s current ethical framework highlights both progress and challenges. While the platform offers engaging AI companionship features, it must strike a balance between creative freedom and stronger ethical safeguards [2]. Prioritizing user protection without compromising the interactive experience is key.

2. Ethical Practices on Other Platforms

Transparency

Platforms like Character AI and TavernAI prioritize clear communication about how AI responses are generated and how user data is handled. Their documentation provides users with a better understanding of these processes [3].

Data Privacy

Each platform takes its own path to safeguard user data:

| Platform | Privacy Measures |
|---|---|
| Character AI | End-to-end encryption |
| TavernAI | Anonymous data collection |
| OpenAI | Compliance with GDPR standards |

User Safety

While Luvr AI leans into creative freedom, platforms such as Character AI take a stricter approach with tools for content moderation and reporting. These systems help identify and filter harmful interactions as they happen [3][6].

Bias Management

Addressing bias is a shared priority across platforms. For example:

- Character AI uses real-time filtering to minimize biased outputs.

- OpenAI incorporates bias checks into regular algorithm updates [1][3].

Efforts to reduce bias often include diverse training datasets, regular updates to algorithms, and continuous monitoring of outputs.

Industry Standards

Ethical guidelines are becoming a cornerstone of AI roleplay platforms. These standards aim to ensure positive and consensual interactions. Many platforms rely on safety protocols and moderation systems to align with these principles [4].

Continuous Improvement

Platforms are constantly refining their safety measures. Real-time monitoring, reporting tools, and algorithm updates are frequently used to address challenges. These updates not only improve safety but also help maintain ethical boundaries while delivering engaging AI experiences [1][3].


Strengths and Weaknesses of Each Platform

This section takes a closer look at how different platforms handle the balance between creative freedom and safety:

| Platform | Strengths | Weaknesses |
|---|---|---|
| Character AI | Strong moderation, clear guidelines, bias filtering | Strict content restrictions, limited storytelling flexibility |
| DreamGen | Balances freedom and safety, clear boundaries, focus on storytelling | Basic encryption, limited AI transparency |
| Luvr AI | Creative freedom, customizable characters | Weak ethical standards, minimal transparency |

Transparency is a key differentiator among platforms. Character AI stands out by providing detailed disclosures about how its AI operates. On the other hand, Luvr AI offers limited information on data handling and consent, which raises concerns about user trust and platform accountability [2].

Privacy measures also vary widely. Character AI prioritizes encryption to secure user data, while Luvr AI leans more toward offering user control tools. These differing strategies show where each platform places its emphasis: security or ease of use [3].

Addressing bias is another challenge. Character AI actively tackles this through real-time filtering, aligning with industry recommendations to minimize algorithmic bias. As experts emphasize:

"Ethical guidelines stress the need to identify and remove biases in training data and algorithms" [1]

When it comes to safety, platforms take different approaches. Character AI relies on automated systems to ensure protection, while Luvr AI places greater emphasis on user freedom, often at the expense of safety measures [2][3]. These contrasting methods have a direct impact on user experiences and the level of protection offered.

This comparison highlights the constant push and pull between innovation and responsibility. How each platform navigates these challenges will shape the ethical landscape of AI roleplay and influence user rights in the future [5].

Final Thoughts

This section summarizes the ethical challenges of AI roleplay and the practical steps both users and developers can take.

AI roleplay brings a mix of opportunities and challenges, especially when it comes to balancing user safety and creative freedom. Platforms currently differ widely in how they address these ethical concerns, leaving room for improvement.

Here are three key areas that need attention:

| Focus Area | Current Challenge | Proposed Solution |
|---|---|---|
| User Privacy | Risk of data breaches | Regular security updates and clear data policies |
| Content Safety | Harmful interactions | Real-time moderation tools and easy reporting options |
| Platform Accountability | Lack of consistent guidelines | Public ethical audits and measurable safety standards |

Developers have a responsibility to ensure a safe and smooth user experience. This means rolling out regular security updates, offering clear guides on how the AI behaves, and publicly sharing ethical audits to build trust.

Users also play a role by crafting thoughtful prompts, following platform rules, and reporting any problematic behavior. The goal is to foster meaningful interactions while staying mindful of the potential consequences in real-world scenarios.

Experts suggest that ethical considerations will become even more important in the coming years. By 2025, platforms with strong ethical foundations are expected to hold 70% of the market [3].

The challenge lies in finding the right balance between allowing creative freedom and promoting responsible use. As these technologies evolve, maintaining this balance will be essential for the growth and success of AI companionship platforms.

FAQs

What are the ethical tensions in human-AI companionship?

Human-AI relationships bring up three main ethical challenges:

| Ethical Tension | Description | Key Consideration |
|---|---|---|
| Companionship-Alienation Irony | AI companionship might reduce human connections | Balance AI interactions with real relationships |
| Autonomy-Control Paradox | Users need to balance control with ethical boundaries | Ensure responsible customization |
| Utility-Ethicality Dilemma | Balancing user freedom with ethical concerns | Follow platform guidelines |

"Responsible AI usage minimizes negative consequences." - GoDaddy Blog [1]

Platforms like Luvr AI and Character AI tackle these challenges by offering varying levels of freedom and safety features [2]. To navigate these issues, users should:

- Familiarize themselves with platform rules and limitations

- Be cautious when sharing sensitive information

- Report harmful content without delay

Using AI responsibly means balancing creativity with accountability, while keeping the broader impacts in mind [1][3]. Both users and platforms must work together to create interactions that are engaging yet ethical.