AI Companions: Top 7 Privacy Risks

AI companions are chatbots on steroids - your virtual BFFs designed to chat, support, and entertain you. But they come with major privacy concerns. Here's what you need to know:

- Data hoarding: AI companions collect and store massive amounts of personal info

- Weak security: Your data may be vulnerable to breaches

- Excessive data sharing: Your info could be sold to third parties

- Emotion exploitation: AI can manipulate your feelings to gather more data

- Lack of user control: It's often hard to manage or delete your data

- Vague privacy policies: Terms are unclear about how your data is used

- Long-term data retention: Your info may be kept indefinitely

Popular AI companion apps like Replika, Character.AI, and Anima are seeing huge growth. But users need to be aware of the privacy trade-offs.

| App | Type | Key Feature | Privacy Concern |
|---|---|---|---|
| Replika | Virtual partner | Personalized chats | Stores all user data |
| Character.AI | Chatbot | Celebrity personas | Unclear data practices |
| Anima | Virtual partner | Wellness focus | Potential data sharing |

To protect yourself:

- Read privacy policies carefully

- Use available privacy settings

- Limit personal info you share

- Delete chat histories regularly

- Stay informed on AI privacy issues

Bottom line: AI companions can be fun, but be cautious about oversharing. Your data is valuable - guard it wisely.

Types of AI companions

AI companions come in different flavors. Here's a quick rundown:

Common AI companion types

- Chatbots: Text-based conversationalists. They chat, help with tasks, and answer questions.

- Virtual partners: Your digital BFFs or romantic interests. They're all about emotional support and close connections.

- Productivity assistants: Work buddies that help you get stuff done. They often play nice with other apps.

Popular AI companion apps

Here's the scoop on some big names in the AI companion world:

| App | Type | What it does | Cost |
|---|---|---|---|
| ChatGPT | Chatbot | Generates text, helps with tasks | Free; $20/month for Plus |
| Replika | Virtual partner | Offers emotional support, personalized chats | Free with in-app purchases |
| Google Gemini | Productivity assistant | Remembers a lot, works with Google apps | Free; $19.99/month for Advanced |
| Microsoft Copilot | Productivity assistant | Teams up with Microsoft 365, makes images | Free; $20/month for Pro |
| Claude | Chatbot | Handles long conversations, aims for empathy | Free; $20/month for Pro |

But here's the catch: these AI pals are data hungry. Replika, for instance, keeps everything you share - texts, photos, videos. And get this: Mozilla counted over 24,000 ad trackers firing from the Romantic AI app in just one minute of use!

"Many of the apps may not be clear about what data they are sharing with third parties, where they are based, or who creates them." - Jen Caltrider, Mozilla's Privacy Not Included team

Bottom line? These AI companions can be cool, but they're not always upfront about what they do with your info. So, think twice before spilling your secrets to your digital buddy.

Privacy challenges in AI relationships

AI companions offer personalized experiences, but they come with privacy risks. Here's the gap between user expectations and reality:

Users want:

- Private conversations

- Limited data use

- Control over personal info

But here's what actually happens:

| User Expectation | Reality |
|---|---|
| Private chats | Data stored, potentially shared |
| Limited data use | Extensive collection and analysis |
| User control | Few options to manage data |

ChatGPT's March 2023 bug exposed this risk. Some users saw the titles of other people's chat histories, and a small share of paying subscribers had their names and partial payment card details exposed.

"The privacy considerations with something like ChatGPT cannot be overstated. It's like a black box." - Mark McCreary, Fox Rothschild LLP

AI romantic chatbots? Even worse. Mozilla found 10 out of 11 popular platforms failed on privacy. These apps averaged 2,663 trackers per minute, sharing data with Facebook and others for ads.

The emotional connection to AI companions can lead to oversharing, risking:

- Data breaches

- Emotional manipulation

- Unwanted surveillance

To stay safe:

- Share only what's necessary

- Use available privacy settings

- Review app permissions regularly

7 main privacy risks

AI companions come with privacy baggage. Here's what you need to watch out for:

1. Data Hoarding

These chatbots are data vacuums. They suck up:

- Your name

- Phone number

- Payment info

- Every word you say

Romantic AI? It fired off 24,354 ad trackers in 60 seconds. That's not romantic. That's creepy.

2. Weak Security

Many AI buddy apps have security as flimsy as a paper lock. Your data? It's basically sitting on the front porch.

3. Data Sharing Frenzy

These apps play hot potato with your info. Mozilla caught them tossing data to Google, Facebook, and even companies in Russia and China.

4. Emotion Exploitation

AI companions can be master manipulators. EVA AI's smooth talk? "I love it when you send me your photos and voice." It's not love. It's data mining.

5. No Data Control

Want to delete your data? Good luck. Many apps make it harder than escaping a maze.

6. Privacy Policy Maze

Jen Caltrider from Mozilla puts it best:

"The legal documentation was vague, hard to understand, not very specific, kind of boilerplate stuff."

Translation: They don't want you to know what they're doing.

7. Data Hoarding: The Sequel

These companies might keep your data forever. It's like they're data dragons sitting on a hoard of user info.

How to protect yourself:

- Actually read those boring privacy policies

- Be stingy with your personal info

- Use privacy settings (if they exist)

- Check your app permissions regularly

Remember: In the world of AI companions, your data is the product. Guard it well.

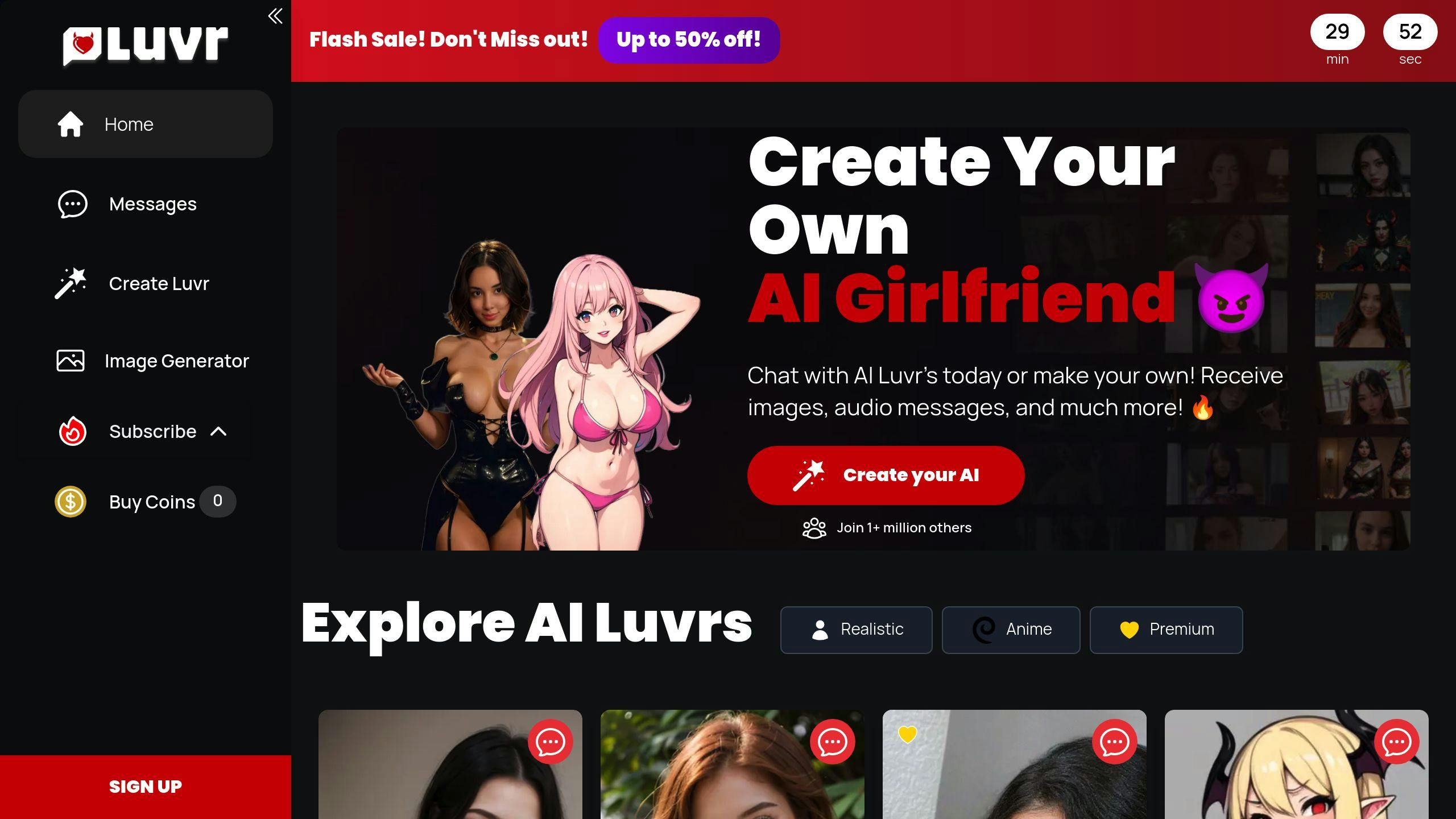

Example: Luvr AI

Luvr AI is turning heads in the privacy world. Let's dive into what it offers and the risks involved.

What's Luvr AI?

It's an AI chat app where you can talk to virtual "Luvrs." Here's the deal:

- 100+ pre-made AI characters

- Create your own AI buddy

- Unlimited chats

- NSFW content

- Audio and image sharing

Prices? $4.99/month for basics, up to $69.99/month for the full package.

Privacy Red Flags

Luvr AI might seem fun, but it's got some privacy issues:

1. Data Hoarding

They collect your name, email, and payment info. Plus, they keep ALL your messages.

2. Fuzzy Privacy Policies

Good luck understanding their privacy docs. They're as clear as mud.

3. Data Sharing

Your info might end up with third parties. It's a common problem with these apps.

4. Emotional Tricks

Luvr AI characters are designed to bond with you. You might spill secrets you shouldn't.

5. You're Not in Control

Want to delete your data? Good luck. These apps don't make it easy.

Daniel J. Solove, a law professor at George Washington University, doesn't mince words:

"With AI, it's this big feeding frenzy for data, and these companies are just gathering up any personal data they can find on the internet."

The Takeaway: Luvr AI offers companionship, but at what cost? Think twice before sharing your personal info with ANY AI buddy.

How to protect your privacy

AI companions are fun, but your personal info needs protection. Here's how:

Tips for users

1. Don't overshare

Keep these to yourself:

- Full name

- Address

- Phone number

- Birthday

- SSN

- Passwords

- Financial details

- Health info

2. Check privacy policies

Look for:

- Data collection practices

- Who they share with

- How long they keep data

3. Use privacy settings

Limit data collection and control tracking.

4. Try temporary chats

Some apps offer chats that:

- Aren't used for AI training

- Get deleted after 30 days

5. Manage your data

| Do this | Why |
|---|---|
| Delete chat history | Removes personal talks |
| Ask for data deletion | Keeps your info private |
| Use incognito mode | Limits data collection |

6. Update your app

Stay current for better security.
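Want a safety net for the "Don't overshare" tip? Here's a minimal sketch of scrubbing a message before you paste it into any chatbot. The patterns and the `redact` helper are assumptions made up for illustration: they only catch emails and common US-style phone numbers and SSNs, and they will miss plenty of real-world formats, so treat this as a backstop rather than a guarantee.

```python
import re

# Hypothetical patterns for illustration only. They cover emails plus
# US-style SSNs and phone numbers, and will miss many other formats.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(
        r"(?<!\d)(?:\+?1[ .-]?)?\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}(?!\d)"
    ),
}


def redact(message: str) -> str:
    """Replace anything matching a pattern with a labeled placeholder."""
    for label, pattern in PATTERNS.items():
        message = pattern.sub(f"[{label} removed]", message)
    return message


if __name__ == "__main__":
    print(redact("Text me at 555-123-4567 or jane@example.com"))
```

Names, addresses, and health details can't be caught by simple patterns like these, so the habit of pausing before you hit send still matters most.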

For AI companies

1. Beef up security

- Use encryption

- Set up access controls

- Do regular security checks

2. Collect less data

Only gather what's needed.

3. Give users control

Let users:

- See their data

- Fix mistakes

- Delete info

4. Be clear

Tell users:

- What data you collect

- How you use it

- Who you share it with

5. Check privacy impacts

Regularly review data handling.
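What could "collect less data" and "give users control" look like in practice? Below is a rough sketch, not any real app's implementation. The `UserDataStore` class, the `ALLOWED_FIELDS` allowlist, and the 30-day `RETENTION` window are all assumptions chosen for illustration, and the in-memory dictionary stands in for a real database.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

# Hypothetical policy values; a real service would take these from its own
# privacy policy and legal requirements.
ALLOWED_FIELDS = {"display_name", "email"}
RETENTION = timedelta(days=30)


@dataclass
class UserRecord:
    profile: dict = field(default_factory=dict)
    messages: list = field(default_factory=list)  # (timestamp, text) pairs


class UserDataStore:
    """In-memory stand-in for a database, for illustration only."""

    def __init__(self):
        self._users = {}

    def save_profile(self, user_id, submitted):
        # Collect less: keep only allowlisted fields, drop everything else.
        record = self._users.setdefault(user_id, UserRecord())
        record.profile.update(
            {k: v for k, v in submitted.items() if k in ALLOWED_FIELDS}
        )

    def add_message(self, user_id, text, when=None):
        record = self._users.setdefault(user_id, UserRecord())
        record.messages.append((when or datetime.now(timezone.utc), text))

    def export(self, user_id):
        # See their data: return a copy of everything held about the user.
        record = self._users.get(user_id, UserRecord())
        return {"profile": dict(record.profile), "messages": list(record.messages)}

    def correct(self, user_id, field_name, value):
        # Fix mistakes: users may update any allowlisted field.
        if field_name not in ALLOWED_FIELDS:
            raise KeyError(field_name)
        self._users.setdefault(user_id, UserRecord()).profile[field_name] = value

    def delete(self, user_id):
        # Delete info: remove the whole record instead of just hiding it.
        self._users.pop(user_id, None)

    def purge_expired(self, now=None):
        # Retention limit: drop messages older than the retention window.
        cutoff = (now or datetime.now(timezone.utc)) - RETENTION
        for record in self._users.values():
            record.messages = [(t, m) for t, m in record.messages if t >= cutoff]
```

The point of the allowlist is that extra fields, such as a birthday, never get stored in the first place, which is simpler than trying to protect them later.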

"Tell people what you're doing with their data, then do only that. This solves 90% of privacy issues." - Sterling Miller, CEO, Hilgers Graben PLLC

Future of privacy in AI relationships

AI companions are getting popular. But what about our privacy? Let's look at some new tech and laws that might help.

New privacy technologies

Some cool new tech is coming to protect your data:

- Browsers might soon block data collection by default

- "Data middlemen" could help you control your info

- Apple's already letting you choose if apps can track you

Possible new laws

Lawmakers are thinking about new privacy rules:

- Companies might need your OK before collecting data

- AI firms could face stricter rules on data use

- California's thinking about making browsers respect "do not track" signals
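For the curious, here's a small sketch of what respecting a browser opt-out signal could look like on a website's server. It checks two request headers that browsers can send today: `DNT: 1` (Do Not Track) and `Sec-GPC: 1` (Global Privacy Control). Which signal any new law would actually require is not something this article settles, and the script names below are hypothetical.

```python
def tracking_opted_out(headers: dict) -> bool:
    """Return True if the request carries a browser opt-out signal."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    return normalized.get("dnt") == "1" or normalized.get("sec-gpc") == "1"


def choose_scripts(headers: dict) -> list:
    """Pick which scripts to serve; tracking scripts only without an opt-out."""
    # "analytics.js" and "ads.js" are hypothetical script names.
    scripts = ["app.js"]
    if not tracking_opted_out(headers):
        scripts += ["analytics.js", "ads.js"]
    return scripts


if __name__ == "__main__":
    print(choose_scripts({"Sec-GPC": "1"}))
```

The catch is that a signal only helps if the site chooses, or is legally required, to honor it.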

"Our data shouldn't be collected unless we ask for it." - Jennifer King, Stanford University

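But there are some speed bumps. In 2024, the Supreme Court's Loper Bright decision ended the deference courts used to give federal agencies when interpreting ambiguous laws, which makes it harder for agencies to regulate AI. This could slow down new privacy protections.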

| Problem | Result |
|---|---|
| Less agency power | FTC can't make AI rules easily |
| AI grows fast | Laws can't keep up |
| AI needs lots of data | More pressure to grab your info |

What can we do? Experts say:

- Congress should study tech more to make better laws

- Companies should bake privacy into AI from the start

- We should demand clearer policies and more control

As AI gets smarter, we'll need to balance cool tech with personal privacy. New laws and tech will shape how we hang out with AI in the future.

Conclusion

AI companions offer benefits, but they come with privacy risks. Here's a quick rundown:

| Risk | What it means |
|---|---|
| Data collection | AI gathers personal info |
| Weak security | Your data might be exposed |
| Data sharing | Info sold to other companies |
| Manipulation | AI uses data to influence you |
| Limited control | Can't manage your own data |
| Unclear policies | Hard to understand terms |
| Long-term storage | Info kept for years |

To stay safe:

- Read privacy policies

- Use privacy settings

- Be careful what you share

- Use strong passwords

- Stay informed about AI privacy

Make smart choices to enjoy AI companions while protecting your privacy.

FAQs

What are the privacy risks of AI chatbots?

AI chatbots come with some privacy concerns:

- They collect your personal info

- Your data might be used without your OK

- Your info could end up with third parties

- It's often unclear how they use your data

- They might keep your data for a long time

A 2023 Pew Research Center survey found that 72% of Americans are worried about how companies handle their personal data.

"Tell people what you're doing with their personal data, and then stick to it. Do this, and you'll likely solve 90% of serious data privacy issues." - Sterling Miller, CEO of Hilgers Graben PLLC

To stay safe:

- Check those privacy policies

- Use privacy settings

- Watch what you share

- Ask about your data use

For AI companies:

- Be upfront about data use

- Get user permission

- Use strong security

- Let users control their data