AI Bias: Gender Norms in Digital Love

AI systems often reinforce outdated gender stereotypes, especially in digital companions. These biases stem from training data and design choices, shaping how AI interacts with users and society. Here's what you need to know:

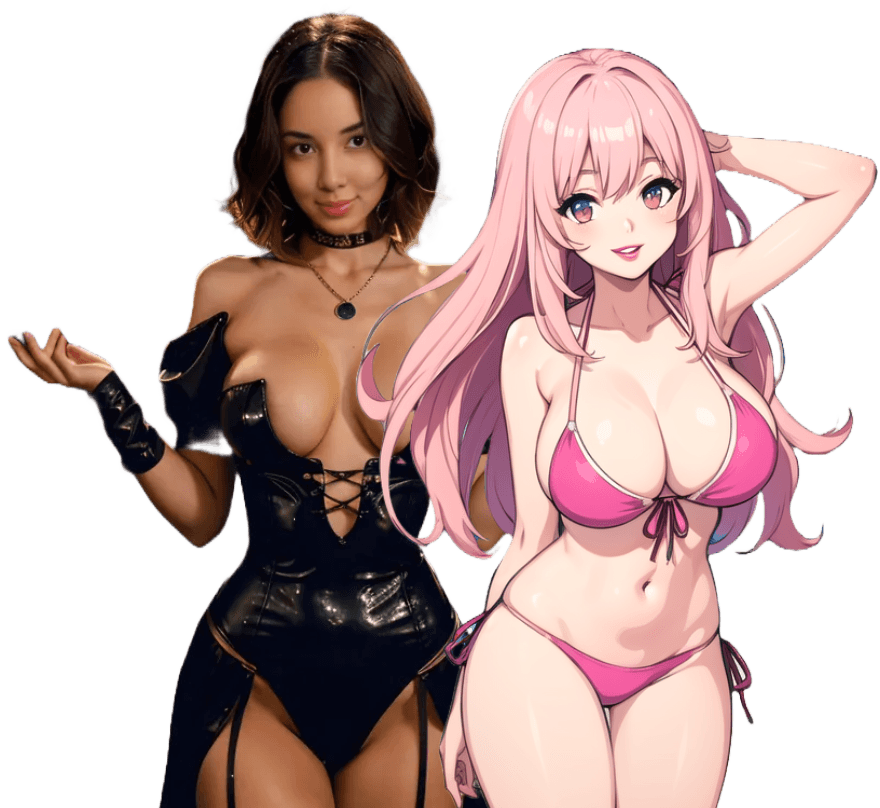

- The Problem: AI companions often follow traditional gender roles, limiting diversity and reinforcing stereotypes. For example, platforms like Luvr AI offer characters with predefined roles such as "Slutty Goth Friend" or "Gym Girl", which reflect societal biases.

- Why It Matters: Gender-biased AI can normalize harmful stereotypes, impact mental health, and shape societal norms in negative ways.

- Solutions:

  - Use diverse training data and tools to detect bias.

  - Create gender-neutral language and customizable AI interactions.

  - Educate users on AI biases and provide feedback tools.

Addressing AI gender bias requires better design, ethical guidelines, and user education. By allowing more customization and promoting balanced interactions, platforms can challenge outdated norms and foster inclusive digital relationships.

Gender Bias in AI Systems

How AI Systems Learn Gender Stereotypes

AI systems pick up gender stereotypes from their training data, which reflects the biases present in society. Once embedded, these stereotypes surface in the AI's responses and interactions, and over time this reinforces existing patterns of bias. How training data is selected and prepared therefore plays a large role in how AI companions understand and communicate about gender: historical data filled with stereotypes perpetuates those stereotypes.

Common Gender Patterns in AI Companions

AI companions often follow predefined character roles that align with traditional gender expectations. For example, Luvr AI's offerings highlight this issue:

"Luvr AI offers AI companions with specific stereotypical roles, such as 'Your Slutty Goth Friend Ember,' 'Gym Girl Lauren,' and 'Your Best Friend's Mom Delicia'"

These roles reflect and reinforce common gender stereotypes, limiting the range of personality traits and behaviors AI companions can exhibit.

Global Effects of AI Gender Bias

The influence of gender bias in AI companions extends beyond individual users, shaping broader societal norms. These biases can reinforce cultural stereotypes, make stereotypical behavior seem normal, and uphold problematic social expectations.

"The design of AI companions often reflects and reinforces existing societal biases, potentially exacerbating inequalities."

Tackling these challenges is key to creating AI companions that encourage more balanced and thoughtful views of gender roles, and it means looking beyond individual conversations to the broader consequences of gender-biased AI relationships.

Effects of Gender-Biased AI Relationships

Gender bias in AI design doesn't just affect the technology itself - it impacts how users think and interact, as well as broader societal norms.

Reinforcing Gender Stereotypes

AI companions often reflect and amplify traditional gender roles. For example, Luvr AI companions are frequently designed with traits that align with conventional stereotypes. This programming can normalize outdated ideas about gender, making it harder to challenge these societal patterns.

Impact on Mental Health

When AI companions reinforce outdated gender roles, they can create unrealistic expectations for real-world relationships. This disconnect may lead to feelings of isolation or anxiety, especially for users who rely heavily on these interactions. The psychological toll highlights the deeper problems with biased AI design.

Ethical Challenges in AI Design

The ethics of creating gendered AI companions go beyond individual users - they influence how society views gender roles as a whole. The way these systems are built and marketed plays a role in shaping public attitudes. Developers must carefully consider how their design choices affect long-term perceptions of gender. Establishing clear ethical guidelines is crucial for promoting balanced representation and ensuring responsible character development. These concerns open the door to practical solutions.

Fixing Gender Bias in AI Systems

Addressing gender bias in AI systems requires a combination of technical solutions and social understanding. These approaches directly tackle the issues discussed earlier.

Improving AI Training Data

- Diverse Data Sources: Use training data that reflects a wide range of cultural backgrounds and gender identities.

- Tools for Detecting Bias: Implement automated systems to identify and flag gender stereotypes in data.

- Frequent Data Reviews: Conduct regular audits to spot and correct recurring bias in AI responses.
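The bias-detection and audit ideas in the list above could be prototyped roughly as follows. This is a minimal sketch, not a description of any platform's actual tooling: the word lists, the function names (`trait_cooccurrence`, `flag_skewed_traits`), and the `min_ratio` threshold are all assumptions made for illustration, and a real audit would need curated lexicons and a far larger corpus.

```python
import re
from collections import Counter

# Hypothetical, illustrative word lists; a real audit needs curated lexicons.
FEMALE_TERMS = {"she", "her", "woman", "girl", "girlfriend"}
MALE_TERMS = {"he", "him", "his", "man", "boy", "boyfriend"}
TRAIT_TERMS = {"submissive", "emotional", "nurturing",
               "dominant", "assertive", "logical"}


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def trait_cooccurrence(corpus):
    """Count how often each trait word shares a sentence with female or male terms."""
    counts = {"female": Counter(), "male": Counter()}
    for document in corpus:
        for sentence in re.split(r"[.!?]+", document):
            tokens = set(tokenize(sentence))
            traits = tokens & TRAIT_TERMS
            if tokens & FEMALE_TERMS:
                counts["female"].update(traits)
            if tokens & MALE_TERMS:
                counts["male"].update(traits)
    return counts


def flag_skewed_traits(counts, min_ratio=2.0):
    """Flag traits whose smoothed female/male count ratio is strongly one-sided."""
    flagged = {}
    for trait in sorted(TRAIT_TERMS):
        f, m = counts["female"][trait], counts["male"][trait]
        if f == 0 and m == 0:
            continue  # trait never appeared near a gendered term
        # Add-one smoothing avoids division by zero for one-sided traits.
        ratio = (f + 1) / (m + 1)
        if ratio >= min_ratio:
            flagged[trait] = "female-skewed"
        elif ratio <= 1 / min_ratio:
            flagged[trait] = "male-skewed"
    return flagged
```

Running `flag_skewed_traits(trait_cooccurrence(corpus))` on a regular schedule, over both training data and sampled AI responses, is one way the "frequent data reviews" step could be made repeatable. Sentence-level co-occurrence is a crude signal, so flagged traits are best treated as prompts for human review rather than verdicts.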

In addition to better data, setting clear design standards helps solidify these improvements.

Establishing AI Design Standards

| Principle | How It's Applied | Expected Benefit |
|---|---|---|
| Gender-Neutral Language | Use automated tools to flag and replace gendered terms | Minimizes stereotypical language |
| Varied Personality Traits | Randomize traits assigned to AI personas | Promotes diverse and balanced character profiles |
| Customizable Interactions | Allow users to adjust communication styles | Ensures respectful and tailored experiences |
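The first two rows of the table can be illustrated with a short sketch. Everything here is assumed for the example: the `NEUTRAL_TERMS` mapping, the `TRAIT_POOL` list, and the function names are hypothetical, and a production system would need a reviewed, context-aware term list rather than a fixed dictionary.

```python
import random
import re

# Hypothetical mapping; real systems need a reviewed, context-aware list.
NEUTRAL_TERMS = {
    "girlfriend": "partner",
    "boyfriend": "partner",
    "policeman": "police officer",
    "stewardess": "flight attendant",
    "mankind": "humankind",
}

_PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, NEUTRAL_TERMS)) + r")\b",
    re.IGNORECASE,
)


def neutralize(text):
    """Replace listed gendered terms and return the new text plus the flagged terms."""
    flagged = []

    def _swap(match):
        word = match.group(0)
        flagged.append(word.lower())
        replacement = NEUTRAL_TERMS[word.lower()]
        # Preserve a leading capital so sentence starts still read correctly.
        return replacement.capitalize() if word[0].isupper() else replacement

    return _PATTERN.sub(_swap, text), flagged


# Illustrative trait pool, shared by every persona regardless of gender.
TRAIT_POOL = ["analytical", "playful", "assertive", "nurturing",
              "adventurous", "reserved", "witty", "ambitious"]


def random_traits(k=3, seed=None):
    """Sample k distinct traits uniformly from the shared pool."""
    rng = random.Random(seed)
    return rng.sample(TRAIT_POOL, k)
```

Drawing traits from a single pool, without conditioning on the persona's gender, is the design choice that matters here: it prevents "nurturing" from being assigned only to female characters or "assertive" only to male ones. The `seed` parameter exists only to make results reproducible when testing.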

Educating Users

Educating users plays a critical role in reducing bias over time.

- Awareness Training: Teach users how AI systems operate and how biases can influence interactions. Include updates on system changes and tips for promoting fair engagement.

- Feedback Tools: Provide simple ways for users to report biased behavior, encouraging collaboration in improving the system.

- Community Guidelines: Set clear expectations for users, emphasizing the importance of respectful interactions. Highlight AI as a tool for learning and growth, not for reinforcing harmful stereotypes.
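As one possible shape for the feedback tools described above, the sketch below models a user report and a simple way to surface characters that attract repeated reports. The `BiasReport` fields, the category labels, and the `threshold` default are assumptions for illustration, not features of any specific platform.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class BiasReport:
    """One user report about a response that seemed gender-biased."""
    character_id: str
    message_excerpt: str
    category: str  # hypothetical labels, e.g. "stereotype" or "gendered-language"
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


def characters_needing_review(reports, threshold=3):
    """Return character IDs with at least `threshold` reports, most-reported first."""
    totals = Counter(report.character_id for report in reports)
    return [cid for cid, n in totals.most_common() if n >= threshold]
```

Keeping the report to a few required fields lowers the effort of reporting, and the aggregated list gives reviewers a starting point for the regular audits mentioned earlier.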

Luvr AI: Tackling Gender Bias

Luvr AI promotes personalized digital relationships that break away from conventional gender norms. By combining thoughtful design with a range of support resources, the platform offers practical ways to address gender bias in digital interactions. Here's a closer look at how Luvr AI achieves this.

Character Creation Features

Luvr AI's character creation tools let users design AI companions with a variety of traits, appearances, and backstories. This flexibility allows users to build personalities and narratives that move beyond traditional gender roles, encouraging more balanced and meaningful interactions.

In addition to these customization options, the platform provides resources to help users maintain fair and respectful digital relationships.

Support and Educational Tools

The platform includes a detailed FAQ section packed with educational materials and tips for fostering balanced interactions. These guides help users navigate the process of building equitable digital relationships, offering practical advice for avoiding gender stereotypes and promoting fairness in their AI connections.

Conclusion

Tackling AI gender bias requires both technical advancements and strict ethical standards. As AI systems continue to develop, it's crucial to focus on fostering digital interactions that challenge outdated gender norms rather than reinforcing them.

Allowing users to customize AI characters can play a key role in this effort. By offering control over character design and relationship dynamics, platforms can encourage more balanced and fair interactions. When paired with clear user guidelines and educational resources, this approach can help reduce stereotypical gender portrayals.

Collaboration between users, developers, and platforms will be essential for future progress. User feedback, in particular, is critical for refining systems and ensuring continuous improvement. Providing accessible support resources can also help users navigate these digital relationships responsibly while staying informed about potential biases.

Reducing gender bias in AI is an ongoing process that requires thoughtful design and active engagement. As technology evolves, prioritizing balanced gender representation will be key to fostering meaningful and inclusive digital experiences.