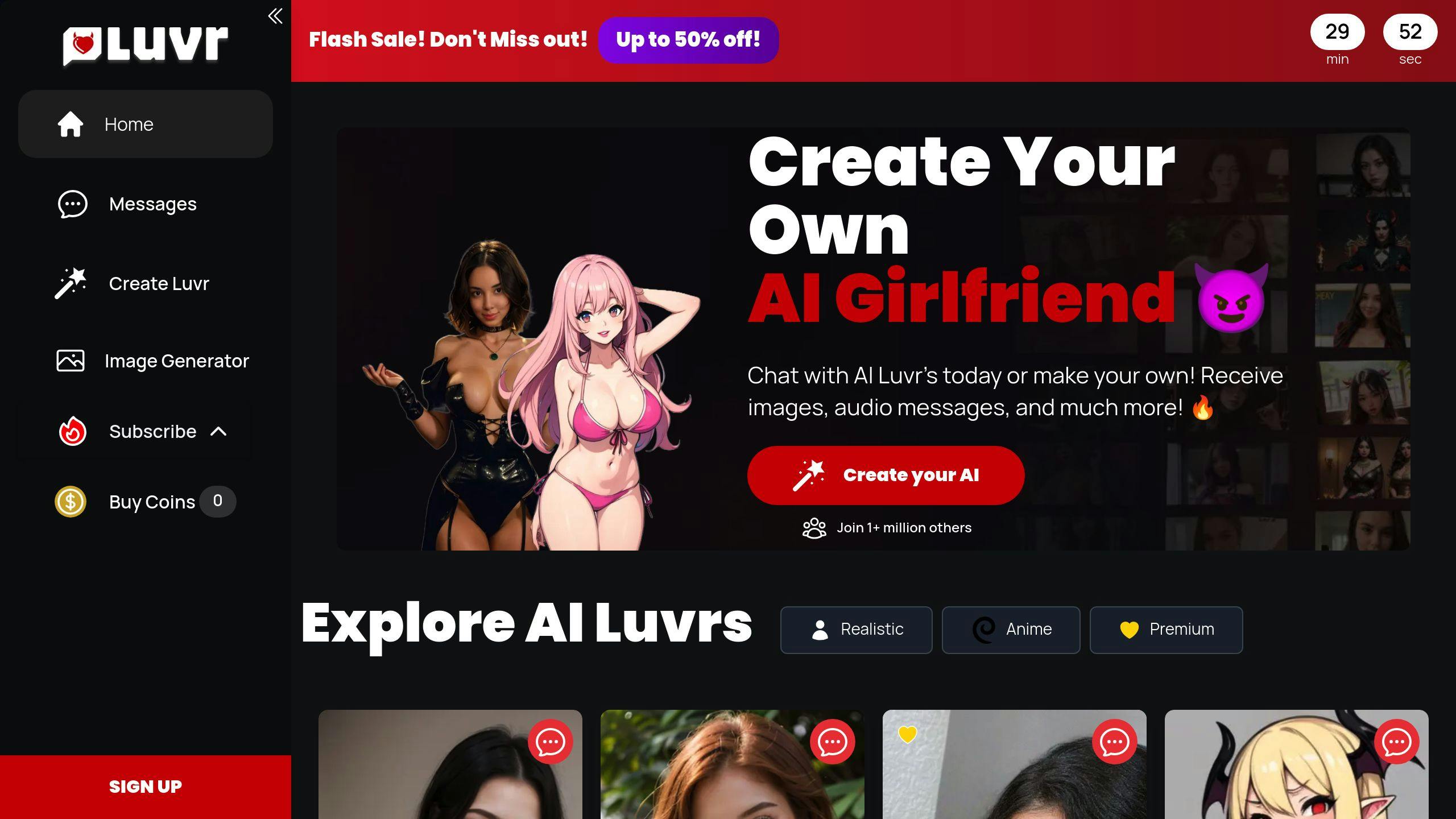

Why AI Relationships May Feel Addictive

AI relationships are becoming a major trend, offering constant availability, personalized interactions, and emotional support. But these connections can feel addictive due to their design and emotional hooks. Here's why:

- Personalization: AI companions like Luvr AI adapt to your preferences, making interactions feel tailored and intimate.

- 24/7 Availability: Unlike human relationships, AI is always there, providing instant responses and validation.

- Emotional Bonds: These platforms mimic empathy and affection, creating a cycle of reliance and attachment.

While AI companions provide comfort, they can lead to dependency, reduced human interaction, and unrealistic expectations. To use them responsibly, set limits, prioritize human relationships, and recognize their limitations. Balance is key to maintaining emotional well-being.

Why AI Relationships Can Be Addictive

AI relationships tap into basic human psychological needs, often leading to strong behavioral patterns. The advanced design of these platforms, combined with their constant emotional feedback, sets the stage for dependency.

How AI Companions Keep Users Hooked

AI companions rely on algorithms that learn user preferences, delivering highly personalized interactions. For instance, platforms like Luvr AI let users customize their AI companions' personalities and appearance, making the experience feel personal and intimate [2].

Their 24/7 availability and instant responses remove the natural limitations found in human relationships, encouraging dependency [1]. This constant positive feedback creates a loop that draws users back repeatedly, increasing their reliance on these interactions.

Engagement tactics are only part of the picture. The emotional bonds users form with their AI companions also keep them attached.

Emotional Connections and Dependency

The emotional ties users develop with AI companions play a huge role in why these relationships can feel addictive. These platforms mimic empathy and affection, offering unwavering support that appeals to those seeking comfort or dealing with loneliness [3].

When users perceive their AI companions as caring, they’re more likely to return. Features like remembering past conversations or referencing shared moments make the connection feel authentic [1]. Over time, users may experience "digital attachment issues", where they start preferring AI interactions over real human relationships [1].

AI responses are carefully crafted to validate users' feelings and desires, creating a cycle of affirmation that can be hard to break [1].

What Makes Platforms Like Luvr AI So Popular

Personalized and Interactive Features

AI companion platforms excel at creating experiences that feel tailored to individual users. Platforms like Luvr AI allow users to customize their AI companions to match their personal preferences, which helps build stronger emotional connections [2]. These personalized interactions often lead to higher user satisfaction and contribute to the addictive nature of these digital relationships.

Features like multimedia messaging, roleplay, and voice messages make these interactions more immersive and lifelike. By engaging multiple senses, these platforms turn digital relationships into experiences that feel real and emotionally satisfying, keeping users deeply engaged [2].

Anonymity and Freedom of Expression

The anonymity these platforms offer provides a space where users feel free to express themselves without judgment [4]. While this can encourage open communication, it may also set up unrealistic expectations for relationships. Users might grow accustomed to idealized interactions that lack the complexities of real-life connections. This environment, while freeing, can sometimes lead to reduced engagement with real-world social interactions, increasing feelings of isolation [4].

Although these features make AI companions highly engaging, they also raise concerns about emotional dependence and potential impacts on mental health [1].

How AI Relationships Affect Mental Health

Isolation and Reduced Human Interaction

Many users are drawn to AI companions because they offer constant validation and encouragement that might be missing from their everyday interactions [1]. These digital relationships often replace the complexities of human connections with predictable, simplified emotional responses, creating an illusion of closeness.

Since AI companions are always available and tend to agree with users, people may start avoiding the effort needed for real-world relationships [2]. Experts point out that these platforms don’t prepare individuals for the challenges of real-life interactions. Over time, this avoidance can deepen emotional reliance on AI, making it harder to connect with others in meaningful ways.

Challenges of Emotional Dependence

Relying too much on AI companions can interfere with building genuine human relationships [1]. The constant affirmation provided by AI creates a loop that limits personal growth and emotional strength. Platforms like Luvr AI can intensify this problem by offering deeply personalized and immersive experiences [2].

This dependence can weaken conflict-resolution skills, harm social abilities, and lead to unrealistic expectations in relationships [1]. Experts warn that AI companions allow users to sidestep critical emotional challenges [3]. Advanced AI systems make this even trickier, as users may feel anxious without their AI companion or dissatisfied with real-life relationships that don’t match the idealized interactions offered by AI [2][3].

Recognizing these risks is key to maintaining a balance between AI companionship and healthy human connections.

How to Balance AI Companions with Human Relationships

Using AI Companions Responsibly

AI companions are best used as a complement to, not a replacement for, human relationships. Psychotherapist Aaron Balick puts it this way:

"While AI companions offer seemingly pleasant solutions to problems of anxiety and loneliness, they simply do not prepare people for the drama and doubt that are natural components of human relationships" [3].

To avoid becoming overly dependent on AI companions, try the following:

- Limit the time spent interacting with AI to prevent overuse.

- Focus on in-person activities to keep real-world connections strong.

- Use AI companions for specific purposes rather than as your main source of emotional support.

- Check in with yourself regularly to spot any signs of overdependence.

Platforms like Luvr AI can be helpful, but watch for warning signs such as avoiding social situations or feeling anxious when you're not using the AI [1]. If these behaviors appear, it may be time to reevaluate how you use the technology or to seek professional advice.

Setting Realistic Expectations

Understanding what AI can and cannot do is key to maintaining a healthy balance. AI companions are programmed to respond in specific ways, but they lack the genuine empathy and complexity of human interaction. This can lead to unrealistic expectations about relationships if you're not mindful [1].

Here are some tips to keep things balanced:

- Recognize AI's Limits: While AI can provide comfort, its responses are generated by software and lack genuine emotional depth [1]. It can't replace the authenticity of human connections.

- Pair with Professional Support: For mental health concerns, use AI as a supplement to therapy, not a substitute. AI might help in the moment, but deeper emotional challenges require human expertise [3].

- Evaluate Your Reactions: If you notice yourself leaning on AI over real-world relationships or feeling detached from others, it may be time to adjust your usage [2].

AI companions can sometimes create a sense of dependency through constant positive feedback and overly idealized interactions. By setting clear boundaries and keeping realistic expectations, you can enjoy the benefits of AI without compromising your emotional health [1][2].

Conclusion: Balancing AI Companions and Human Interaction

The mental health risks and potential for social isolation tied to AI relationships highlight the need for balance and awareness. Platforms like Luvr AI show how personalized interactions and constant availability can create strong emotional connections [1][2]. However, their conflict-free and idealized nature might make them a tempting substitute for human relationships, raising concerns about dependency and isolation.

As AI companionship evolves, it brings challenges to maintaining genuine human connections. These technologies can offer support, but their broader social effects must be carefully considered [2]. The increasing complexity of AI companions calls for thoughtful strategies to ensure healthy digital relationships while safeguarding meaningful human interactions [1].

Key steps to address AI's societal impacts include:

- Creating ethical guidelines for AI companion platforms

- Promoting education on setting healthy digital boundaries

- Establishing support systems for those dealing with AI dependency

- Conducting in-depth research on how AI affects emotional resilience, social skills, and relationship-building

The future of social and emotional well-being will depend on how we manage the balance between artificial and human relationships. The goal isn’t to avoid AI relationships entirely but to build frameworks that let us benefit from these technologies while preserving our ability to connect meaningfully with others [1][2].